A Vision-based Deep Learning Platform for Human Motor Activity Recognition

In Proceedings of the 12th International Conference on Modern Circuits and Systems Technologies (MOCAST), to appear.

Mobina Mobaraki, Anushree Bannadabhavi, Matthew J. Yedlin, and Bhushan Gopaluni

Abstract

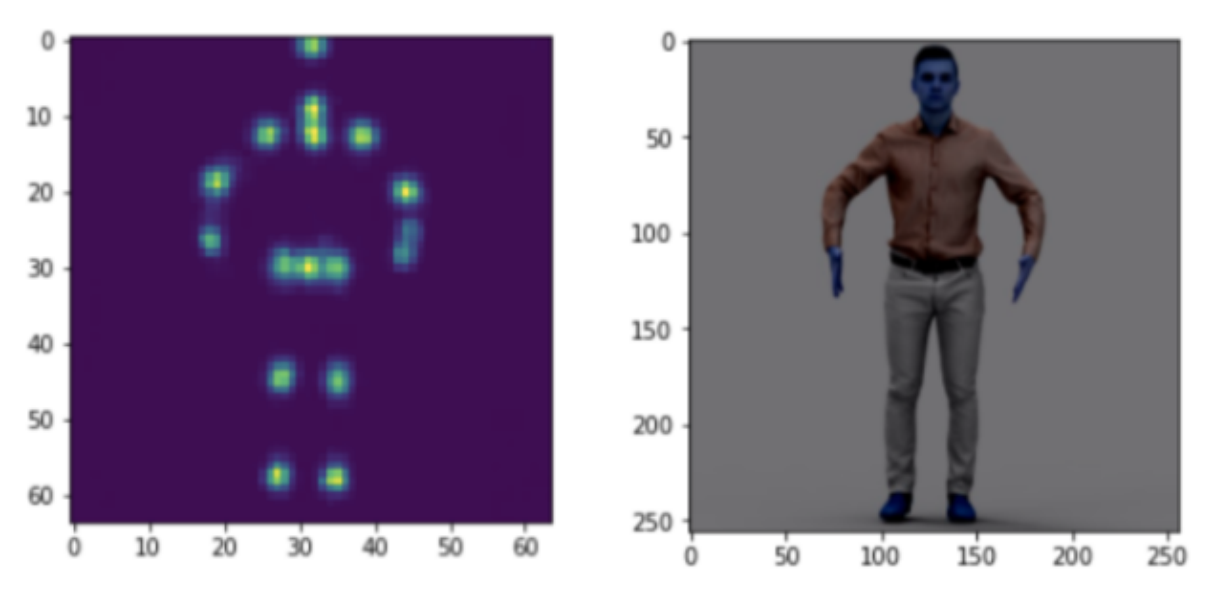

Sensors such as Kinect cameras, which can track the body movements of patients with movement disorders, are not easily accessible. Recently developed deep learning models, a subset of Artificial Intelligence (AI), can analyze patients' behavior from RGB images captured with smartphones. The Stacked Hourglass model is a novel deep learning model for pose estimation that can accurately determine the locations of body joints, and a long short-term memory (LSTM) network can determine the corresponding action by analyzing the kinematic behavior of those joints. This study develops a deep learning model that takes RGB images from the UT-Kinect dataset as input and identifies the performed action with 84.14% accuracy. Specifically, our contributions are: (i) developing a preprocessing pipeline to apply the Stacked Hourglass model to the UT-Kinect dataset; (ii) fine-tuning the model to handle 20 joints; and (iii) adding a human action recognition component to accurately classify the performed actions. Our method can serve as an efficient replacement for hard-to-access Kinect cameras and can be used to analyze various diseases that involve movement disorders.
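The two-stage idea in the abstract (a pose network produces per-joint heatmaps, and an LSTM classifies the resulting joint trajectories) can be illustrated with a minimal, dependency-free sketch. This is not the authors' implementation. It assumes that heatmaps are already available as nested lists (the Stacked Hourglass network itself is not implemented), that joint 0 serves as the root for centring the skeleton, and that there is one output class per UT-Kinect action category. The names `decode_heatmaps`, `frame_features` and `TinyLSTM` are hypothetical, and the LSTM weights are random and untrained, so the code only demonstrates the data flow.

```python
import math
import random

NUM_JOINTS = 20   # UT-Kinect skeletons provide 20 joints
NUM_CLASSES = 10  # assumption: one output per UT-Kinect action category


def decode_heatmaps(heatmaps, img_w, img_h):
    """Return one (x, y) image coordinate per joint, taken at its heatmap peak.

    heatmaps: list of 2-D lists (rows x cols), one per joint, in the assumed
    format a pose network such as Stacked Hourglass would output.
    """
    joints = []
    for hm in heatmaps:
        rows, cols = len(hm), len(hm[0])
        # Argmax over the 2-D grid: the cell with the highest score.
        r, c = max(((i, j) for i in range(rows) for j in range(cols)),
                   key=lambda rc: hm[rc[0]][rc[1]])
        # Rescale heatmap cell indices to input-image pixel coordinates.
        joints.append((c * img_w / cols, r * img_h / rows))
    return joints


def frame_features(joints, scale, root=0):
    """Centre the skeleton on a root joint, divide by scale, and flatten.

    root=0 is a hypothetical choice of reference joint (e.g. a hip centre).
    """
    rx, ry = joints[root]
    feats = []
    for x, y in joints:
        feats.extend([(x - rx) / scale, (y - ry) / scale])
    return feats


def sigmoid(x):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class TinyLSTM:
    """Single-layer LSTM over per-frame joint features, softmax on last state."""

    def __init__(self, input_size, hidden_size, num_classes, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                    for _ in range(rows)]

        n = input_size + hidden_size
        # One weight matrix and bias per gate: input, forget, cell candidate, output.
        self.W = {gate: init(hidden_size, n) for gate in "ifgo"}
        self.b = {gate: [0.0] * hidden_size for gate in "ifgo"}
        self.W_out = init(num_classes, hidden_size)  # untrained classifier head
        self.hidden_size = hidden_size

    def forward(self, sequence):
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            z = list(x) + h  # concatenate current input with previous hidden state
            pre = {gate: [a + b for a, b in zip(matvec(self.W[gate], z), self.b[gate])]
                   for gate in "ifgo"}
            i = [sigmoid(v) for v in pre["i"]]
            f = [sigmoid(v) for v in pre["f"]]
            cand = [math.tanh(v) for v in pre["g"]]
            o = [sigmoid(v) for v in pre["o"]]
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, cand)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        logits = matvec(self.W_out, h)
        m = max(logits)  # subtract the max for a stable softmax
        exps = [math.exp(v - m) for v in logits]
        total = sum(exps)
        return [e / total for e in exps]


if __name__ == "__main__":
    rng = random.Random(1)
    T, H, W, IMG = 4, 16, 16, 256  # hypothetical clip length, heatmap and image sizes
    clip = []
    for _ in range(T):
        heatmaps = []
        for _ in range(NUM_JOINTS):
            hm = [[0.0] * W for _ in range(H)]
            hm[rng.randrange(H)][rng.randrange(W)] = 1.0  # synthetic joint peak
            heatmaps.append(hm)
        clip.append(frame_features(decode_heatmaps(heatmaps, IMG, IMG), scale=IMG))
    probs = TinyLSTM(2 * NUM_JOINTS, 16, NUM_CLASSES).forward(clip)
    # The weights are random, so this "prediction" only demonstrates the data flow.
    print("predicted class index:", probs.index(max(probs)))
```

In practice the LSTM would be trained on labelled clips with a deep learning framework rather than written by hand. Centring each frame on a root joint and dividing by a scale factor is one common way to make the features less sensitive to where the subject stands in the image; it is an illustrative choice here, not a detail taken from the paper.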