Recruitment

We recruit year-round for postdocs, MASc and PhD students, visiting students and undergraduate students. Please see our Opportunities page for more information.

Maintenance-free Controllers

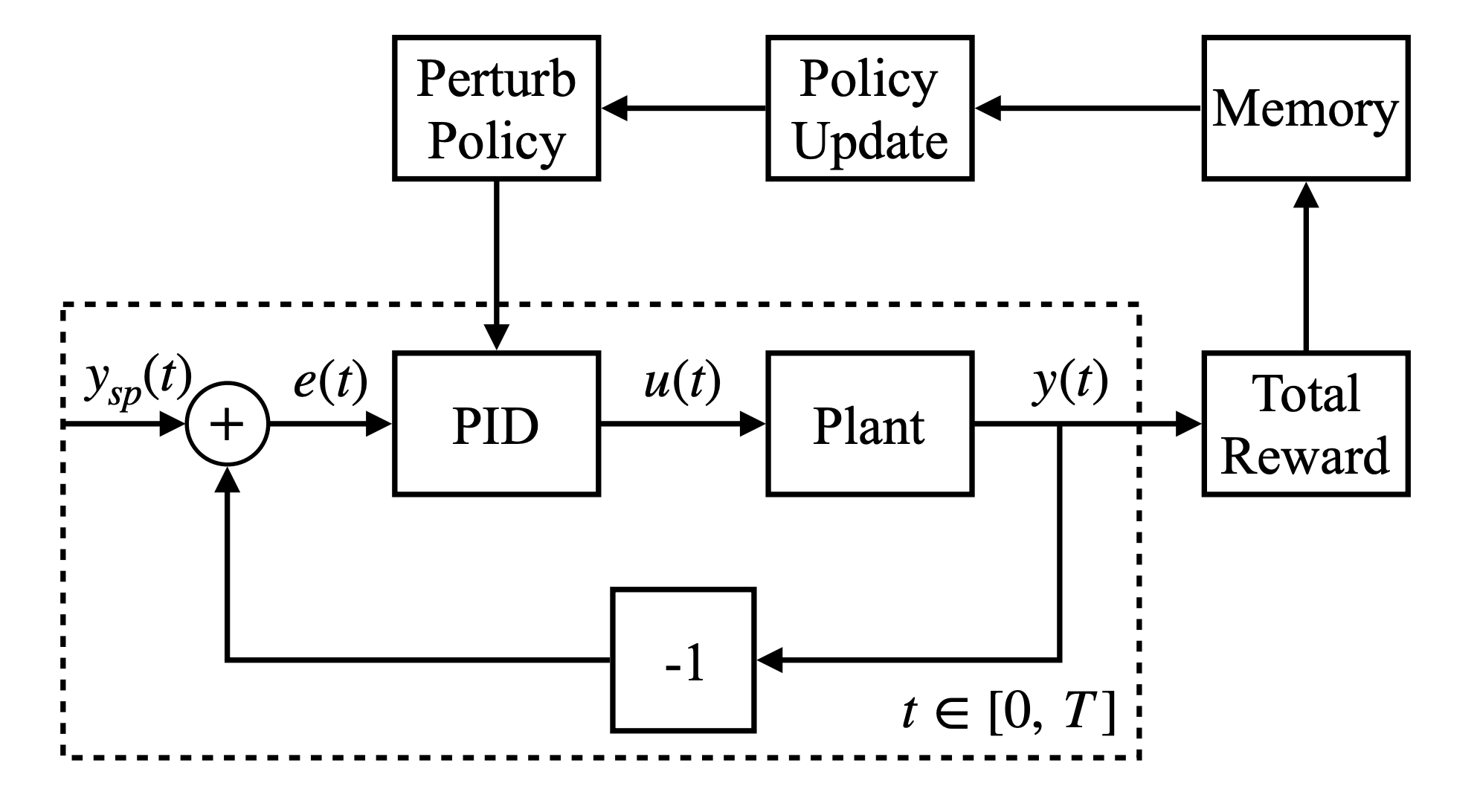

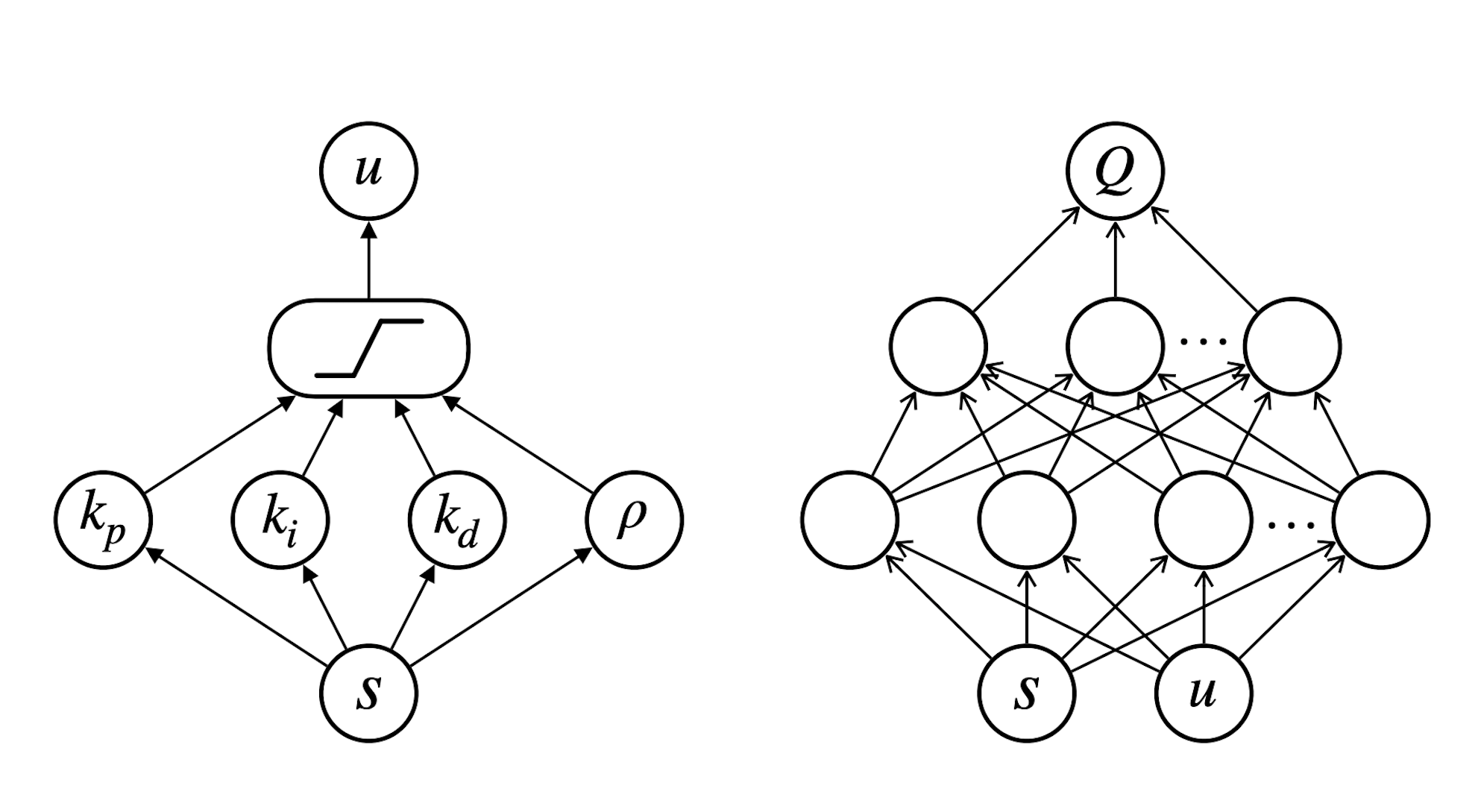

Traditional controllers used in the process industries, such as PID loops or model predictive control (MPC), require constant attention and upkeep throughout their entire lifecycle, including modeling, design, tuning and maintenance. These controllers are typically designed for a narrow operating range by assuming linear process behavior, and are not resilient to changes in plant equipment or operating conditions. In complex industrial systems, there is often a trade-off between controller performance and the complexity of the underlying models, which can be computationally intensive and difficult to interpret or maintain.

Inspired by the recent successes of deep learning in computer vision and natural language processing, our group is exploring deep reinforcement learning (DRL) as a model-free and maintenance-free framework for process control in industrial settings. Our recently published work shows promising results for DRL in terms of setpoint tracking performance and adaptability, but many fundamental questions remain open, including sample efficiency (big data is not always good data), stability guarantees, interpretability and computational challenges.

Ultimately, we are interested in the development of smart plants and advanced controllers that can provide a high level of safety and reliability for the industry.

See below for a selection of our papers related to deep (reinforcement) learning.